This semester saw an inter-department collaboration project between the UX department and the team behind UNC Libraries’ digital scholarly repository, the Carolina Digital Repository (CDR). The CDR team manages cdr.lib.unc.edu, where UNC affiliates can deposit scholarly work in an open-access, fully searchable Web space.

The project, completed during the spring semester of 2016, was to carry out user research for the CDR and to assess cdr.lib.unc.edu against well-known usability heuristics. Specifically, this involved:

- An online survey, linked throughout the CDR Web space

- One-on-one interviews with CDR users around campus

- A heuristic evaluation of cdr.lib.unc.edu by the UX department

Ultimately, the goal of the project was to introduce user research methods that the CDR could use on their own in the future. Ideally, further collaboration between the CDR and UX departments will build on the insights gained this semester.

Online survey

Surveys are a basic, standard research method for collecting information on user behavior, demographics, impressions, and preferences. Creating a simple survey and promoting it to users throughout their experience with the CDR was the first step toward building internal user research initiatives.

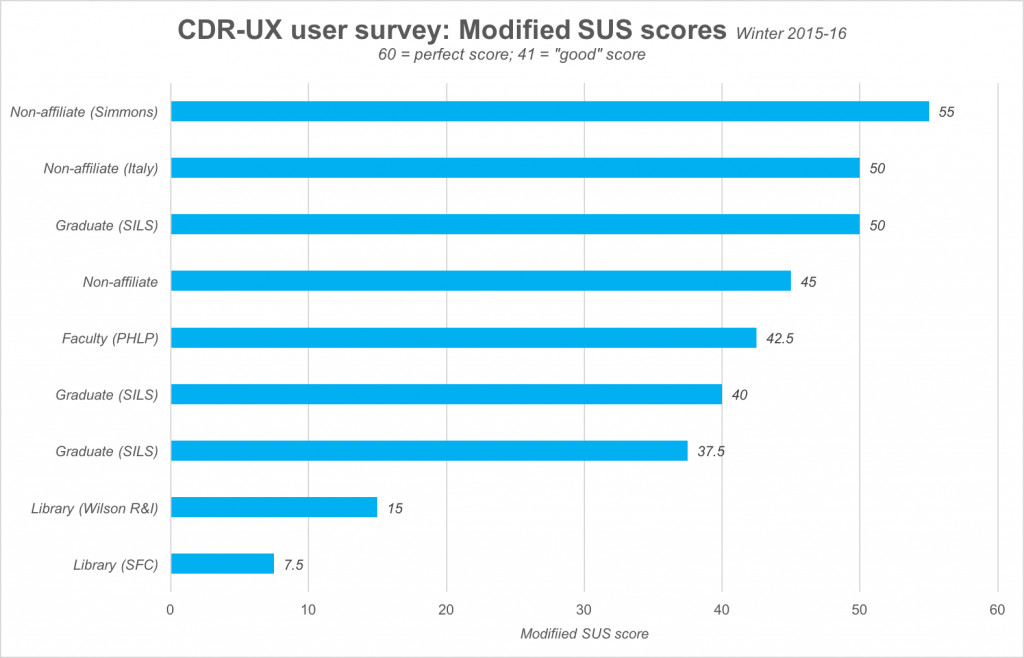

Our survey asked basic demographic questions, to determine who CDR users are, as well as some questions from the System Usability Scale (SUS), to determine how usable they find the CDR.

Demographic and open-ended questions included:

- Which group best describes you? (Undergrad, grad, faculty, etc.)

- What is your department/school/major?

- Briefly, why did you come to the CDR today?

- Could you tell us any general comments you have about the CDR?

The System Usability Scale (SUS) is an established Likert-scale survey for measuring users’ perceptions of usability. The full SUS has 10 questions, but to prevent survey fatigue we used only 6. The SUS is also only meaningful with participants who are familiar with the system being tested; a good time to give it, for example, is right after a usability test. Since the survey was promoted through simple links throughout the site, we could not tell whether survey-takers were familiar with the CDR or brand new users. Because of this, we used only a handful of the most general SUS questions.

We created the chart above from early results to share with CDR staff at one of our first meetings to discuss the results. On the standard SUS, a score of 68 is generally considered “average” (SUS scores are not percentages!). For our modified SUS, this meant that 41 was an “average” or “good” score. Most scores were above or close to average, but scores from librarians who took the survey were markedly lower.
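For readers who want to try the SUS themselves, the standard scoring rule for the full 10-item questionnaire can be sketched in Python. It follows the published SUS scoring: odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a 0 to 100 score. The function name `sus_score` is hypothetical, and this sketch does not attempt to reproduce how the six-question variant above was scored to arrive at 41.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Compute a standard System Usability Scale score (0-100).

    Assumes the full 10-item SUS in its standard order, with each item
    answered on a 1-5 Likert scale (1 = strongly disagree, 5 = strongly agree).
    """
    if len(responses) != 10:
        raise ValueError("standard SUS scoring expects exactly 10 responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")

    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd-numbered items are positively worded: contribution is response - 1
            total += response - 1
        else:
            # Even-numbered items are negatively worded: contribution is 5 - response
            total += 5 - response

    # Each item contributes 0-4, so the raw total is 0-40; scaling by 2.5 maps it to 0-100
    return total * 2.5
```

For example, `sus_score([4, 2] * 5)` returns 75.0, above the commonly cited average of 68, while answering 3 to every item returns 50.0.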

User interviews

Interviews allow practitioners to talk face-to-face with users of their system. While interviews are typically less clinical and more personable than a survey or formal lab test, they can’t tell you everything. According to Nielsen’s recommendations on user interviews, they are useful only for learning users’ general attitudes, or their general approaches to solving a given problem. In other words, don’t expect users to be designers!

Our survey asked participants to leave an email address if they were interested in talking further about the CDR. From these responses, we were able to schedule several user interviews on campus.

Heuristic evaluation

Heuristic evaluation is a non-empirical method in which experienced practitioners assess a system according to how well it meets an established set of usability heuristics. The UX department conducted a heuristic evaluation of the CDR with a team of 3 people using Nielsen’s 10 heuristics for user interface design. Each person evaluated the CDR against the heuristics independently, noting potential usability problems. Nielsen covers the process of heuristic evaluation in more detail.

Afterwards, two meetings were held to combine everyone’s notes into a final report, which was then forwarded to the CDR. Results were later discussed in an inter-department meeting between the CDR and UX.

The end result was an actionable list of potential usability problems for the CDR team to consider. While heuristic evaluation does not necessarily suggest concrete design changes, it typically uncovers potential usability problems that design changes could address.

Next steps

The CDR now has greater insight into who their users are, what their needs are, and what usability problems the site’s current design might cause. Further research and assessment can be carried out, some of it by CDR staff. One of our departmental goals is to empower other UNC Libraries staff to continue these initiatives on their own.

The UX department will remain a point of contact for future CDR projects that require further inter-department collaboration. Other library departments may also approach the UX department for similar work. The department will continue developing this “client model” for projects within UNC Libraries so that librarians and staff can make better user-centered decisions on their own.

Contributions

This project was completed by:

Daniel Pshock, User Experience CALA

Chad Haefele, Interim Head of User Experience

Sarah Arnold, Instructional Technology Librarian

With assistance from CDR staff:

Mike Daines, Digital Repository Analyst

Ben Pennell, Applications Analyst

Hannah Wang, CDR CALA