Back in March, the UX Department had the pleasure of attending an ASERL webinar about on-the-fly usability testing led by our Duke University Libraries colleague, Emily Daly. In her talk, Emily described a method for doing quick usability testing on targeted sections of the library’s website. The method involves setting up in a high-traffic area of the library and asking passersby to perform a few tasks for a small incentive such as coffee or a gift card. At the end of May, we were inspired to try this out in both Davis Library and the Undergraduate Library.

Background

Throughout the library, we’ve been having conversations about the discoverability of our resources. Given these conversations, along with a lack of reliable usage statistics and an ongoing e-resources transition project, we wanted to assess how easily users are able to find known items through the various searches on our library site. We also wanted to learn a bit more about how our users research on their own: what types of sources they look for, where they look for them, and how they prefer to access them.

Methods

We set up our testing laptop near the entrances to the Undergraduate Library and Davis Library with signs pointing our way. We did not actively recruit participants beyond having the signs on display. We had one note taker and one moderator for each test except the last 2, when only one of us was available to run the tests.

Based on the needs discussed above, we developed 5 known-item tasks and 1 open-ended question about the last research project the user worked on. The 5 known-item tasks asked participants to find a book, a journal article, an ebook, a streaming movie, and a database. We included a warm-up question in which users were asked for demographic information and any general experiences they’ve had using our site. We also asked for any final thoughts or questions each user had after completing all of the tasks.

Since we took turns moderating, we developed a test script to follow as we led each participant through the tasks. This script also ensured that we shared the same information with each participant about what we were doing and why. We borrowed from Steve Krug’s recommended usability testing script.

Participants

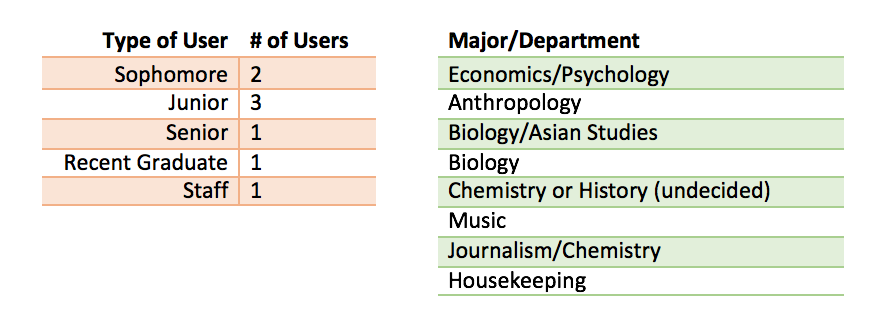

Right: Majors/department of participants.

Despite it being summer, we were able to recruit 8 participants in a relatively short amount of time over the course of 2 days. The last 2 participants were asked to perform only tasks 1 and 2. As displayed in the above tables, we spoke to 2 sophomores, 3 juniors, 1 senior, 1 recent graduate, and 1 staff member. This small group represented a wide swath of disciplines with a heavy emphasis on the sciences.

Findings

The following is a summary of our findings, task by task.

Task 1: Find the book Being Mortal: Medicine and What Matters in the End by Atul Gawande

Of the 8 participants, 6 used the main search bar on our library home page to look for the book. Five of them successfully found the book on their first search, while 1 didn’t find it. The other 2 used the catalog and the browser’s address bar (which performed a Google search), respectively. Of these 2, the participant who used the catalog found the book, and the other didn’t find it.

Since the title we chose is UNC’s summer reading book, most of the copies were checked out at the time. We asked some of the participants how they would go about getting a copy of it. One commented that it is frustrating having to go between libraries. Another stated that he’d look for an ILL-like option for items on campus, a service he wasn’t sure existed.

Task 2: Find the online journal article “Musical Sound Quality in Cochlear Implant Users…” by Patpong Jiradejvong, et al.

Three of the 8 participants used the main search bar again and were all successful at finding the journal article. Another 3 users went directly to Articles+, our discovery service powered by Serials Solutions, which also proved to be successful. One participant accessed the article via a Google search in the browser’s address bar. She successfully found and accessed the article, but only because we were on campus and she didn’t need to authenticate.

The remaining participant accessed JSTOR since a professor had told him it was the largest collection of journal articles available on campus. He tried multiple combinations of the title and authors’ names, but was unsuccessful. At this point, he returned to the library’s home page and used the main search bar, which proved to be a successful strategy for finding the article. He did state that he understands the concept behind the main search bar and Articles+ on its own, but prefers the cleaner presentation of information in JSTOR.

We asked 5 out of the 8 participants what format they prefer for online articles. All expressed a preference for downloaded PDFs. One participant stated he preferred to read an article in full via PDF, but would use the HTML version and Ctrl+F if looking for a specific phrase for a paper he was writing.

Task 3: Find the eBook Beginning App Development with Parse and Phonegap by Wilkins Fernandez

Of the 6 participants who were asked to complete this task, 5 used the main search bar again and successfully found the ebook. The sixth participant used the library’s catalog, and was also successful at finding it.

One of the participants commented that she had never specifically looked for an ebook, but had used them in the past while researching. Three out of the 6 stated they would use the “full text” link to access the ebook and then download a PDF if available.

Task 4: Find the streaming movie Hoop Dreams

Five out of the 6 participants successfully completed this task. Three used the main search bar again, while the other 3 used FilmFinder, a searchable database of the films available at UNC that pulls information from our catalog. The participant who didn’t find the streaming version of Hoop Dreams did find the hard copy of it.

The general comments from participants focused on their surprise at finding streaming films through FilmFinder and at not having to go to the Media Resources Center to access a film.

Task 5: Find the database ProQuest Dissertations & Theses

Databases proved to be trickier for our participants. Only half of the 6 successfully found the database. Three of the participants used the main search box, and only 2 were successful, both by using the Best Bets option. The other successful participant accessed the database via the Music Library’s home page, which he navigated to using the Libraries & Hours page. He commented that it would be convenient to be able to access the branch library home pages via the main library site rather than the hours page.

The final 2 participants, neither of whom found the database, used differing methods. One said that she would honestly just Google the database, which she proceeded to do. The other tried finding the database using the library’s E-Research by Discipline page, the main access point for our online databases. From that point, he was uncertain what subject this particular database would fall under, and he missed seeing it listed in the Frequently Used box to the right.

Task 6: Describe the last project/assignment that you worked on and how you started your research.

Regardless of their topic or the type of resource they were searching for, 5 out of the 6 participants stated they began their research using the library’s main search bar. The sixth participant started with the Psychology subject page on the E-Research by Discipline tool since her instructor had told her to look for information in PsycInfo.

Many of the participants described the nebulous process of research, beginning with a basic search that leads to various resources and other ideas or topics to follow up on. Eventually they became more precise in their searches and the resources used, homing in on specific subject-related databases like JSTOR or search tools like the library’s catalog.

Next Steps

Overall, the participants were successful at finding the known items using our main search bar, among other resources and tools. We do have some room for improvement and directions to follow up on as we move forward, such as reassessing the information available on our home page and the layout of our E-Research by Discipline page.

Running these quick usability tests was painless, and they were easy to set up. We do need to determine a better incentive to use in the future, but otherwise, this method of testing will be practical for us to do at least 2-3 times each semester.

Contributions

This project was completed by:

Chad Haefele, Interim Head of User Experience

Sarah Arnold, Instructional Technology Librarian

Grace Sharrar, UX Research Assistant